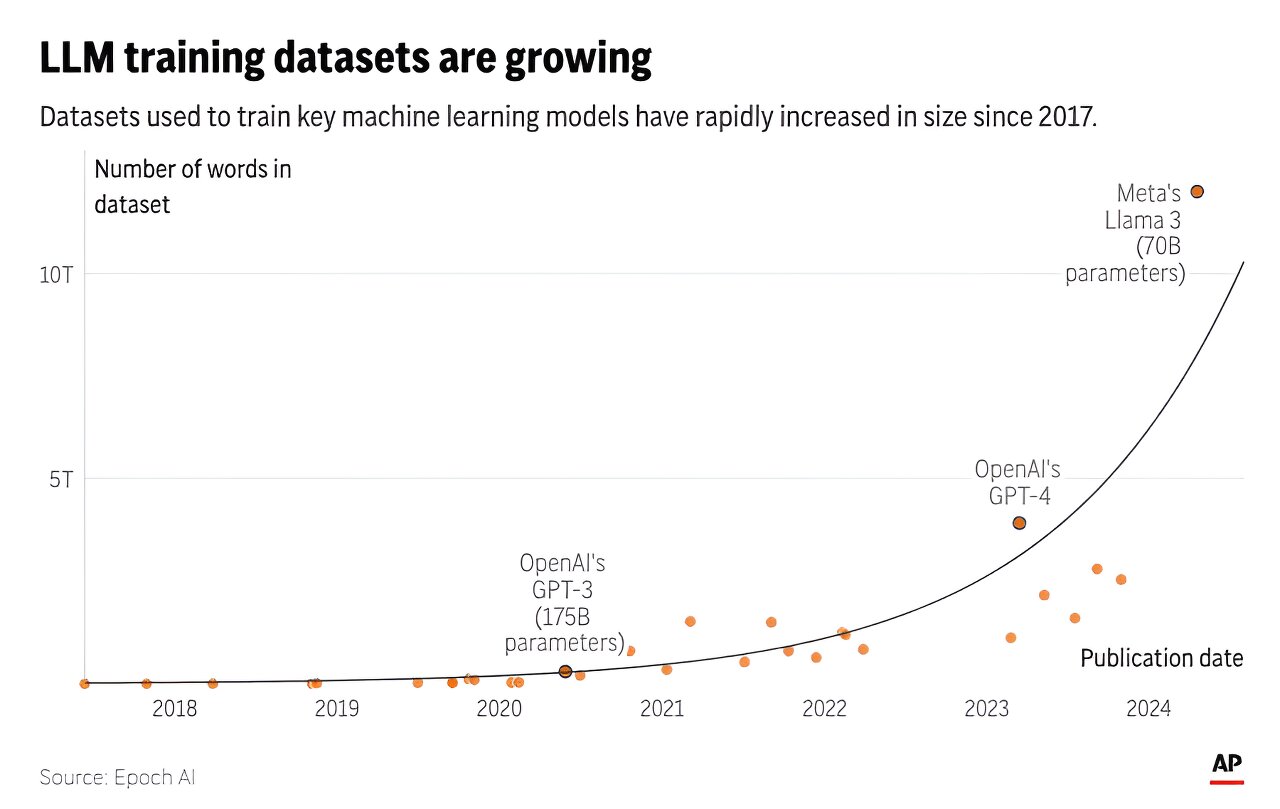

The meteoric rise of AI chatbots like ChatGPT feels almost miraculous – an explosion of capabilities that once seemed decidedly sci-fi. But behind the scenes, a very real dilemma is emerging. The technology behind systems like ChatGPT depends on training AI models on staggering amounts of human-written text scraped from the internet. And according to new research, we may be rapidly depleting the very resource that kicked off the AI gold rush in the first place.

A study released this week by the non-profit Epoch AI makes a startling projection – tech companies hoovering up online text to train their large language models could exhaust the supply of publicly available human-written text sometime between 2026 and 2032. It’s a sobering forecast that hints at the limits of an approach that has driven AI’s breakneck progress over the past decade.

“There is definitely a bottleneck on the horizon in terms of the amount of quality data available to continue scaling up these models,” explains Tamay Besiroglu, a researcher at Epoch and co-author of the study. “And scaling has been the primary driver of their rapidly improving capabilities.”

The situation highlights the “gold rush” mentality now gripping the AI field. Companies like OpenAI and Google are scrambling to lock down premium data streams, cutting deals with sources like Reddit, online publishers, and more. It’s a land grab to stock their language models with high-quality writing before the well runs dry.

But that short-term frenzy only underscores the longer-term existential bind. “Once you’ve exhausted all those public sources, then what?” says Besiroglu. “The options get more ethically and legally fraught from there.”

Potential alternatives, such as scraping personal communications or training on AI-generated synthetic data, have major downsides. The former raises privacy and legal concerns, while the latter risks degrading model quality. Those constraints could put a ceiling on how advanced language models built with current techniques can ultimately become. Some experts warn of a second “AI winter” if the field runs up against them.

Of course, the timeframe is still uncertain, and new AI training breakthroughs could extend the runway. But the writing may already be on the wall, so to speak. Besiroglu notes that over a decade ago, AI researchers realized that combining modern computing power with the internet’s vast store of human-written text was a potent formula. Now that equation could be breaking down.

“We’ve been in this amazing period of advances enabled by that big data paradigm,” Besiroglu says. “But like any finite resource, those easy-to-access text corpora are going to eventually run out. Then the real challenge begins for sustaining the current trajectory.”

The study offers a glimpse of AI’s Achilles’ heel in its present form. For all the mind-boggling capabilities that make ChatGPT seem intelligent, it is still ultimately an ultra-sophisticated storehouse of human knowledge. Its genius is in recombining our words in novel ways. At some point, even that well will have been tapped. And then AI may need to evolve in more profound, uncertain ways, or risk calcifying if it cannot.